Introduction

When dealing with colour images, many image processing operations assume that the colour channels can be either a) processed independently or b) converted into greyscale. Basically, the assumption is that an image is either black and white or that it can be treated as three separate black and white images. For a lot of operations, this is actually pretty reasonable. For example, when you’re blurring an image, the underlying math doesn’t care if an image is colour or greyscale. Things start breaking down when the math does care.
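Here is one way to see why blurring doesn't care. A box blur is a linear operation, and so is a weighted-sum greyscale conversion. That means blurring each channel and then converting gives the same result as converting and then blurring. The following C++ sketch checks this on a single row of pixels. It assumes a simple 3-tap box blur and OpenCV's default greyscale weights (0.299, 0.587, 0.114), and the function names are made up for illustration.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using RgbF = std::array<double, 3>;  // (r, g, b) as floating-point values

// Weighted-sum greyscale conversion with OpenCV's default weights.
double toGrey(const RgbF &p) {
    return 0.299 * p[0] + 0.587 * p[1] + 0.114 * p[2];
}

// 3-tap box blur of a 1-D signal; the two end samples are copied unchanged.
std::vector<double> boxBlur(const std::vector<double> &v) {
    std::vector<double> out = v;
    for (std::size_t i = 1; i + 1 < v.size(); ++i)
        out[i] = (v[i - 1] + v[i] + v[i + 1]) / 3.0;
    return out;
}

// Blur each colour channel independently, then convert the result to grey.
std::vector<double> blurThenGrey(const std::vector<RgbF> &row) {
    std::array<std::vector<double>, 3> channels;
    for (const RgbF &p : row)
        for (std::size_t c = 0; c < 3; ++c) channels[c].push_back(p[c]);
    for (auto &ch : channels) ch = boxBlur(ch);
    std::vector<double> grey;
    for (std::size_t i = 0; i < row.size(); ++i)
        grey.push_back(toGrey({channels[0][i], channels[1][i], channels[2][i]}));
    return grey;
}

// Convert to grey first, then blur the single grey channel.
std::vector<double> greyThenBlur(const std::vector<RgbF> &row) {
    std::vector<double> grey;
    for (const RgbF &p : row) grey.push_back(toGrey(p));
    return boxBlur(grey);
}

// Largest absolute difference between two equally sized signals.
double maxAbsDiff(const std::vector<double> &a, const std::vector<double> &b) {
    assert(a.size() == b.size());
    double worst = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i)
        worst = std::fmax(worst, std::fabs(a[i] - b[i]));
    return worst;
}
```

For any row, `maxAbsDiff(blurThenGrey(row), greyThenBlur(row))` comes out as zero, up to floating-point rounding.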

Pixel Values

A digital image is essentially a \(\mathrm{height}\times\mathrm{width}\) grid where each element \(I[x,y]\) stores the value of one pixel. For a greyscale image, each cell contains only a single value. In most cases [1] the values are whole numbers between 0 and 255. That’s because images are usually stored with 8 bits per value, and the largest number that fits in 8 bits is \(2^8-1 = 255\).
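The general rule is that an \(n\)-bit unsigned value can range from 0 to \(2^n - 1\). A small C++ sketch (the helper names are hypothetical) computes that maximum. It also maps an integer value onto the 0 to 1 range that floating-point images typically use (see footnote [1]).

```cpp
#include <cassert>
#include <cstdint>

// Largest value an unsigned integer pixel can hold with the given bit depth.
std::uint32_t maxPixelValue(unsigned bits) {
    assert(bits >= 1 && bits <= 31);  // keeps the shift well-defined
    return (std::uint32_t{1} << bits) - 1;
}

// Map an integer pixel value onto the [0, 1] range used by floating-point images.
double normalise(std::uint32_t value, unsigned bits) {
    assert(value <= maxPixelValue(bits));
    return static_cast<double>(value) / maxPixelValue(bits);
}
```

For example, `maxPixelValue(8)` is 255, while a 12-bit sensor gives `maxPixelValue(12)`, which is 4095.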

Things are a little bit different for colour images because each pixel now has multiple values associated with it. The easiest way to think about it is that a typical red, green and blue (RGB) image is three separate images joined together. That means that each pixel at position \([x,y]\) contains a triplet instead of just one value. So, a bit more formally, a greyscale image is \(I[x,y] = c\), where \(0 \le c \le 255\), while a colour image is \(I[x,y] = (r,g,b)\), where \(0 \le r, g, b \le 255\).
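The following minimal C++ sketch makes the "three images joined together" idea concrete. It assumes the common interleaved layout, where each pixel's three values sit next to each other and pixels are stored row by row. Here `x` is taken to be the column and `y` the row. The `RgbImage` type and `splitChannels` function are illustrative and don't come from any library. Note that OpenCV, used later on, stores colour images in blue, green, red order instead.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// A colour image of height x width pixels, stored row by row in one
// interleaved buffer: r, g, b, r, g, b, ...
struct RgbImage {
    std::size_t height, width;
    std::vector<std::uint8_t> data;  // size = height * width * 3

    // Channel c (0 = r, 1 = g, 2 = b) of the pixel in column x, row y.
    std::uint8_t at(std::size_t x, std::size_t y, std::size_t c) const {
        assert(x < width && y < height && c < 3);
        return data[(y * width + x) * 3 + c];
    }
};

// Split the colour image into three separate single-channel images (planes).
std::array<std::vector<std::uint8_t>, 3> splitChannels(const RgbImage &img) {
    std::array<std::vector<std::uint8_t>, 3> planes;
    for (auto &p : planes) p.reserve(img.height * img.width);
    for (std::size_t y = 0; y < img.height; ++y)
        for (std::size_t x = 0; x < img.width; ++x)
            for (std::size_t c = 0; c < 3; ++c)
                planes[c].push_back(img.at(x, y, c));
    return planes;
}
```

Each returned plane is a greyscale image of the same size, so anything that works on one greyscale image can be run on each plane in turn.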

Wikipedia provides a good overview of this on their Grayscale page.

From Colour to Greyscale

Okay, so that still doesn’t answer the question, “why do we care?” The short answer is that sometimes we want to compare different parts of the same image. We usually do this by looking at pixel values and measuring the difference between a pixel at \([x_1, y_1]\) and a pixel at \([x_2, y_2]\). Finding edges, for example, comes down to looking for large differences between neighbouring pixels.
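For a greyscale image, the difference between two pixels is just the absolute difference of two numbers. For a colour image, it’s the difference of two triplets, which is itself a 3D vector. The C++ sketch below uses Euclidean distance to turn that vector into a single number. That is an assumption made for illustration: it’s one simple choice, not the approach developed later in this document.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <cstdlib>

using Rgb = std::array<std::uint8_t, 3>;  // (r, g, b)

// Greyscale: the difference between two pixels is a single number.
int greyDifference(std::uint8_t a, std::uint8_t b) {
    return std::abs(static_cast<int>(a) - static_cast<int>(b));
}

// Colour: the difference is a 3-vector. Its Euclidean length is one common
// way (assumed here) to reduce it to a single "how different" number.
double colourDifference(const Rgb &p, const Rgb &q) {
    double sum = 0.0;
    for (std::size_t c = 0; c < 3; ++c) {
        double d = static_cast<double>(p[c]) - static_cast<double>(q[c]);
        sum += d * d;
    }
    return std::sqrt(sum);
}
```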

Things get a bit more interesting when you consider how an RGB image becomes a greyscale image. In theory, the conversion is pretty simple. And it is. The grey value of any pixel is just a weighted sum of its RGB values [2]. OpenCV’s default conversion [3] uses weights that preserve the relative “brightness” of the colours:

\[ Y = 0.299R + 0.587G + 0.114B \]

The variable is labelled \(Y\) because it comes from the YUV/YCbCr colour representations, where \(Y\) is the luma (brightness) component.
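In code, the conversion is a one-liner. This C++ sketch applies OpenCV’s default weights (0.299 for red, 0.587 for green and 0.114 for blue) and rounds to the nearest 8-bit value. `toGrey` is a hypothetical helper. OpenCV may use fixed-point integer arithmetic internally, so this sketch isn’t guaranteed to match `cv::cvtColor` bit-for-bit.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Weighted sum of R, G and B with OpenCV's default weights, rounded to the
// nearest 8-bit grey value.
std::uint8_t toGrey(std::uint8_t r, std::uint8_t g, std::uint8_t b) {
    double y = 0.299 * r + 0.587 * g + 0.114 * b;
    long rounded = std::lround(y);
    assert(rounded >= 0 && rounded <= 255);  // the weights sum to 1
    return static_cast<std::uint8_t>(rounded);
}
```

Because the weights sum to 1, white \((255, 255, 255)\) maps to 255 and black maps to 0.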

Anyway, the key thing is that a colour-to-grey conversion is a dot product between two vectors: the pixel’s \((R, G, B)\) values and the weights. Put another way, it is the projection of a 3D vector space onto a 1D subspace. Or, minus the math-speak, it takes three separate values and reduces them to a single value. However you put it, you’re turning several numbers into one, so some information is necessarily lost, and this is where weird things start happening.
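To see how much gets thrown away, the following brute-force C++ sketch writes the conversion literally as a dot product (via `std::inner_product`). It counts how many 8-bit \((r, g, b)\) triplets land on each grey level, assuming OpenCV’s default weights and rounding to the nearest integer. There are \(256^3 = 16{,}777{,}216\) possible colours but only 256 grey levels, so on average 65,536 different colours share each grey value.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <numeric>

// OpenCV's default greyscale weights for (R, G, B).
constexpr std::array<double, 3> kWeights{0.299, 0.587, 0.114};

// The colour-to-grey conversion as a dot product of the weights and a pixel.
double dot(const std::array<double, 3> &w, const std::array<double, 3> &p) {
    return std::inner_product(w.begin(), w.end(), p.begin(), 0.0);
}

// Count how many of the 256^3 possible 8-bit (r, g, b) triplets map to each
// of the 256 grey levels, rounding the dot product to the nearest integer.
std::array<std::uint64_t, 256> greyPreimageCounts() {
    std::array<std::uint64_t, 256> counts{};
    for (int r = 0; r < 256; ++r)
        for (int g = 0; g < 256; ++g)
            for (int b = 0; b < 256; ++b) {
                long y = std::lround(dot(kWeights, {double(r), double(g), double(b)}));
                assert(y >= 0 && y <= 255);
                ++counts[static_cast<std::size_t>(y)];
            }
    return counts;
}
```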

When Things Break

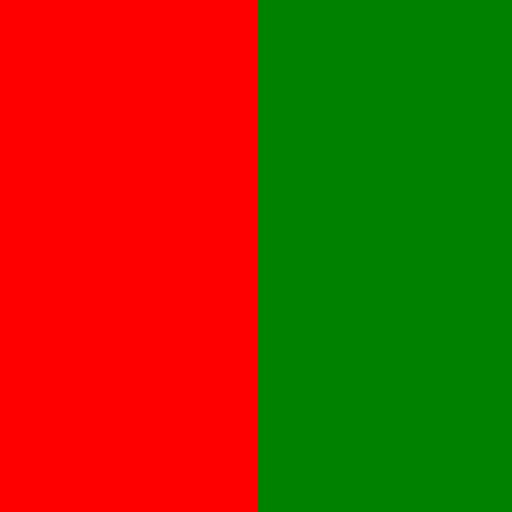

Consider this image:

An image with red on the left side, green on the right side.

One side of it is a dark red and the other side is a dark green, so there’s an edge running right down the middle. Now, consider loading that image and converting it to greyscale using the following code:

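```cpp
#include <iostream>
#include <opencv2/imgcodecs.hpp>  // cv::imread, cv::imwrite
#include <opencv2/imgproc.hpp>    // cv::cvtColor

int main(int nargs, char **args)
{
    if (nargs < 3) {
        std::cerr << "usage: " << args[0] << " <input> <output>\n";
        return 1;
    }
    cv::Mat img = cv::imread(args[1]);  // colour images load in BGR order
    if (img.empty()) {
        std::cerr << "could not read " << args[1] << "\n";
        return 1;
    }
    cv::Mat grey;
    cv::cvtColor(img, grey, cv::COLOR_BGR2GRAY);  // weighted sum of B, G, R
    cv::imwrite(args[2], grey);
    return 0;
}
```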

The output is this image:

Resulting greyscale image.

That’s not a glitch. Everything’s working exactly as it’s supposed to. The red and green values were chosen so that, after conversion, both sides get exactly the same grey value, and the edge disappears.
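A one-row version of this experiment shows why an edge detector working on the greyscale image sees nothing. The C++ sketch below uses the pair \((254, 0, 0)\) and \((0, 129, 0)\), one red/green pair that both round to a grey value of 76 with OpenCV’s weights. The exact values in the image above aren’t given, so treat these as illustrative. The Euclidean distance between colour pixels is again an assumed measure, not this project’s method.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

using Rgb = std::array<std::uint8_t, 3>;  // (r, g, b)

// Weighted-sum greyscale conversion (OpenCV's default weights), rounded.
int toGrey(const Rgb &p) {
    long y = std::lround(0.299 * p[0] + 0.587 * p[1] + 0.114 * p[2]);
    assert(y >= 0 && y <= 255);
    return static_cast<int>(y);
}

// Largest absolute grey difference between horizontally adjacent pixels.
int maxGreyStep(const std::vector<Rgb> &row) {
    int best = 0;
    for (std::size_t i = 1; i < row.size(); ++i)
        best = std::max(best, std::abs(toGrey(row[i]) - toGrey(row[i - 1])));
    return best;
}

// Largest Euclidean distance between horizontally adjacent colour pixels.
double maxColourStep(const std::vector<Rgb> &row) {
    double best = 0.0;
    for (std::size_t i = 1; i < row.size(); ++i) {
        double sum = 0.0;
        for (std::size_t c = 0; c < 3; ++c) {
            double d = static_cast<double>(row[i][c]) - static_cast<double>(row[i - 1][c]);
            sum += d * d;
        }
        best = std::max(best, std::sqrt(sum));
    }
    return best;
}
```

For the row \((254,0,0), (254,0,0), (0,129,0), (0,129,0)\), `maxGreyStep` returns 0. By contrast, `maxColourStep` returns about 285, which is \(\sqrt{254^2 + 129^2}\).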

So, what happened? To keep things simple, let’s set the blue value to zero, so that we only need to worry about the red and green values. The colour-to-greyscale equation then becomes

\[ Y = 0.299R + 0.587G \]

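Now we want to figure out which values of \(R\) and \(G\) give exactly the same value of \(Y\). Once they do, the processing algorithm is essentially colour blind to the difference between them. To find them, we first need the largest value that \(Y\) can have. For a reason that will (hopefully) become obvious shortly, that is the largest value of \(Y\) that a pure red and a pure green can each reach on their own:

\[ Y_{\max} = \left\lfloor \min(0.299 \times 255,\ 0.587 \times 255) \right\rfloor = \left\lfloor \min(76.245,\ 149.685) \right\rfloor = 76 \]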
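This limit can also be checked by brute force. The C++ sketch below uses hypothetical helper names, OpenCV’s red and green weights, and rounding to the nearest integer. It tries every pure red value against every pure green value and keeps the largest grey value they share. It returns 76, because a pure red pixel can’t get any brighter than \(\mathrm{round}(0.299 \times 255) = 76\).

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Rounded grey value of a pure red (r, 0, 0) pixel, using OpenCV's red weight.
long greyOfRed(int r) {
    assert(r >= 0 && r <= 255);
    return std::lround(0.299 * r);
}

// Rounded grey value of a pure green (0, g, 0) pixel, using OpenCV's green weight.
long greyOfGreen(int g) {
    assert(g >= 0 && g <= 255);
    return std::lround(0.587 * g);
}

// Brute-force search: the largest grey value that some pure red pixel and some
// pure green pixel (channel values in [0, 255]) both convert to.
long largestSharedGrey() {
    long best = 0;  // (0, 0, 0) trivially matches itself
    for (int r = 0; r <= 255; ++r)
        for (int g = 0; g <= 255; ++g)
            if (greyOfRed(r) == greyOfGreen(g))
                best = std::max(best, greyOfRed(r));
    return best;
}
```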

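So, we need to produce a value of 76. Finding the red and green values that do this means solving a system of simultaneous equations, one for each side of the edge (subscripts 1 and 2). We set it up like so:

\[ \begin{aligned} 0.299R_1 + 0.587G_1 &= 76 \\ 0.299R_2 + 0.587G_2 &= 76 \end{aligned} \]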

To keep things simple(r), let \(R_1 = 0\) and \(G_2 = 0\). That means that

\[ 0.587G_1 = 76, \qquad 0.299R_2 = 76 \]

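Finding the values is just a matter of dividing 76 by the appropriate weight and then rounding down. In other words,

\[ G_1 = \left\lfloor \frac{76}{0.587} \right\rfloor = 129, \qquad R_2 = \left\lfloor \frac{76}{0.299} \right\rfloor = 254 \]

Rounding down means the weighted sums are 75.723 and 75.946 rather than exactly 76, but both round to the same 8-bit grey value of 76.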
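The same calculation can be written as a small C++ sketch. It assumes OpenCV’s red and green weights (0.299 and 0.587), and `solveForGrey` and `RedGreenPair` are illustrative names.

```cpp
#include <cassert>
#include <cmath>

// Pixel values on each side of the edge, with R_1 = 0 and G_2 = 0 fixed.
struct RedGreenPair {
    int green1;  // G_1: green value of the pure green pixel
    int red2;    // R_2: red value of the pure red pixel
};

// Solve 0.587 * G_1 = y and 0.299 * R_2 = y, rounding each result down.
RedGreenPair solveForGrey(double y) {
    assert(y >= 0.0);
    return {static_cast<int>(std::floor(y / 0.587)),
            static_cast<int>(std::floor(y / 0.299))};
}
```

Calling `solveForGrey(76.0)` gives \(G_1 = 129\) and \(R_2 = 254\), and both pixels round to the same grey value.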

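Now, the reason for choosing \(Y=76\) should be a bit more apparent. The colour values have to be between 0 and 255 (or some predefined maximum value). Choosing the larger of the two limits, the \(0.587 \times 255 \approx 150\) that green can reach on its own, would mean that \(R_2 \approx 500\) [4], which is far outside that range.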
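Here is a quick C++17 check of that limit. The `pureRedFor` helper is hypothetical and assumes OpenCV’s red weight of 0.299. It returns the red value needed to reach a given grey level, or `std::nullopt` when that value would be out of range.

```cpp
#include <cassert>
#include <cmath>
#include <optional>

// Red value (rounded down) that a pure red pixel needs to reach grey value y
// on its own, or std::nullopt if that would exceed the 8-bit maximum of 255.
std::optional<int> pureRedFor(double y) {
    assert(y >= 0.0);
    double r = std::floor(y / 0.299);
    if (r > 255.0) return std::nullopt;
    return static_cast<int>(r);
}
```

`pureRedFor(76.0)` returns 254. Asking for the green-only maximum, `pureRedFor(0.587 * 255.0)`, returns `std::nullopt`, because the required red value is about 500.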

What can we do?

Okay, so by now it should be obvious that converting an RGB image into a greyscale image has…problems. Does that mean that things are hopeless? That we just have to accept the ambiguities that come when converting to greyscale? Well, no, we don’t. And the fact is that dealing with colour isn’t particularly difficult.

The rest of this document/website will discuss one way to use colour information to find image edges. There are others, and it’s a topic that has been studied a fair bit. This project was primarily inspired by the work of Lukac et al. on colour image filtering using vector order statistics [lukac2005]. The section on Colour Gradients is closely related to the work by [scharcanski1997], which also has some basis in vector order statistics. There are other approaches, including ones based more on calculus than on statistics, but those won’t be discussed here.

Footnotes

[1] Actually, that’s not quite true, since the maximum value depends on a number of things, such as how the image was captured and stored. RAW images are a good example: their pixel values lie between 0 and the maximum value allowed by the bit depth of the camera’s sensor. Images can also be represented using floating-point numbers, in which case the values usually lie between 0 and 1.

[2] The way that you choose the weights can affect how the final greyscale image appears. For example, that’s what the adjustment sliders in the “black & white” panel of Adobe Lightroom do.

[3] See the “RGB ↔ GRAY” section of the Color Conversions page in the OpenCV documentation.

[4] Proving this is left as an exercise for the reader.